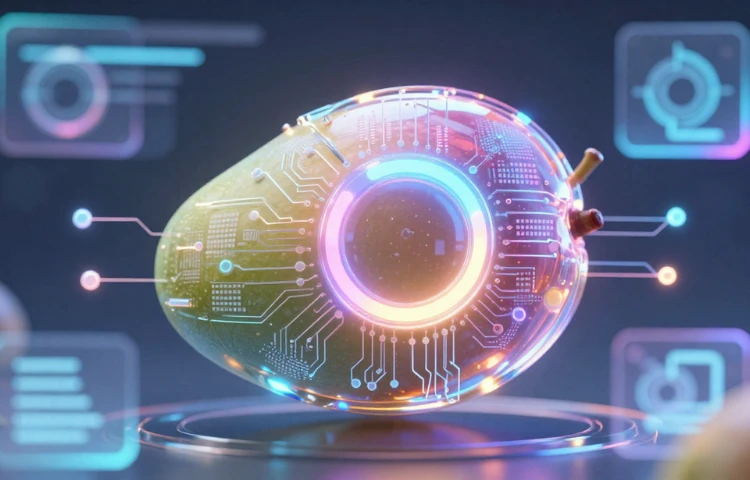

Meta, long known primarily for social networking, is poised to make a major leap in artificial intelligence with its next-generation multimodal AI model, code-named “Mango”. Reportedly set to debut in the first half of 2026, Mango is Meta’s strategic push into advanced image and video generation, designed to elevate visual content creation and rival industry heavyweights like Google and OpenAI.

Mango is an artificial intelligence model under development by Meta Platforms Inc., with a focus on high‑fidelity image and video generation. Unlike text‑only models, Mango aims to push the boundaries of visual content creation — enabling generation and manipulation of both static and moving images based on natural language instructions. [LinkedIn]

This marks a key pivot for Meta from earlier tools like Make-A-Video to a more powerful, proprietary system that could produce longer, more coherent video sequences. It might also incorporate multimodal “world understanding”, meaning the model grasps environmental context and object dynamics rather than just generating frame after frame.

According to internal reports, Meta plans to launch Mango in the first half of 2026, alongside a companion text-oriented model called “Avocado”, a large language model optimized for code and planning tasks.

This strategy reflects a broader industry shift toward multimodal AI (AI that understands and generates across text, image, and video formats). If you want a primer on why multimodal AI is becoming central to the future of AI technology, TechCrunch’s coverage of emerging models and their impact on industries is an excellent resource.

The race in image and video generation comes as users and creators increasingly demand richer content. Competitors like Google’s Nano Banana, the image model in Gemini, have already made waves for their high-quality AI image outputs. Meanwhile, platforms like Runway Gen-2 continue to evolve text-to-video tools for mainstream audiences.

Meta’s Mango, if it delivers on its promises, could significantly elevate what visual AI can do, especially if it succeeds in producing coherent, context-aware videos from basic text prompts. For more insights into how video AI models are shaping the future of media creation, check out this comprehensive overview from The Verge on the evolution of text-to-video technology.

While Meta has not released detailed technical specs yet, industry sources suggest Mango may support:

These capabilities could make Mango especially attractive to a broad spectrum of users — from social media creators to professional video editors.

Mango isn’t coming out of nowhere. It’s part of Meta’s broader AI ecosystem which includes:

Meta’s shift from releasing open models like Llama to building more proprietary systems like Mango highlights a strategic change toward commercially competitive AI technologies. Curious how this compares to other AI company strategies? See this detailed comparison of AI platforms across the industry by AI Trends.

Mango’s introduction could have wide implications:

For real‑world examples of how AI is already revolutionizing creative workflows, the Adobe Firefly blog offers a wealth of context on integrating AI into day‑to‑day creative production.

Meta’s Mango will enter a highly competitive space. Already, tools from OpenAI, Google, and Adobe are attracting massive attention — meaning user adoption will depend on quality, integration, and accessibility.

Moreover, developing reliable long‑form video generation that feels natural and cohesive is still a technological challenge for all players in the field. This means Mango will need to prove itself on ease of use, flexibility, and output quality to make a real difference.

Meta’s Mango represents a bold stride into the future of generative AI. With the promise of advanced image and video capabilities, it opens fresh possibilities for creators, brands, and developers looking to harness AI for richer visual expression. As the first half of 2026 approaches, the AI community and content creators alike should keep a close watch on this unfolding technology.
